Mathematics of Information and Data Science

What we do

MINDS (Mathematics of Information and Data Science) is our research group based in the School of Mathematics and Computer Science and part of the Maxwell Institute for Mathematical Sciences in Edinburgh. Our mission is “to enable the reliable transformation of data into information through the development of efficient and rigorous mathematical and computational techniques, with special attention to impactful applications that generate social and economic benefit.”

Data Science is an all-encompassing field that deals with the vast amounts of data that have become available in recent years. The challenge is to transform such raw data efficiently into meaningful information that can aid the strategic decision making of practitioners across applications and industries. Meeting this challenge requires methods that can either handle large amounts of data effectively or extract the maximum amount of information from small amounts of data. Developing these methods requires a deep understanding of the mathematical and statistical concepts underlying Data Science, together with rigorous analysis that guides their development and establishes their efficacy. Data Science has significant impact in many fields, such as computational imaging, bioinformatics, audio processing, text processing and finance, and these applications provide the framework within which such methods are developed.

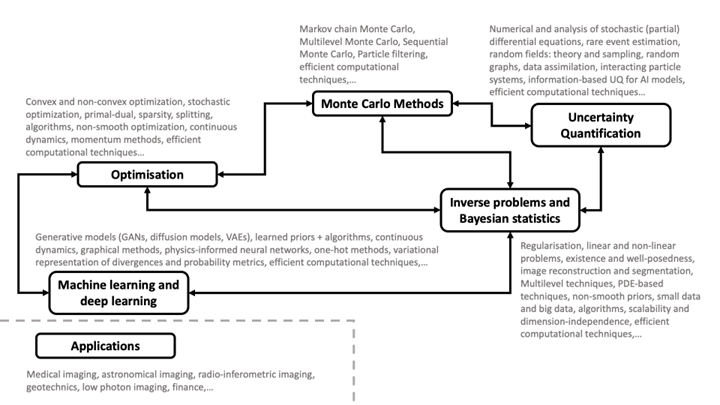

With our members and collaborators, MINDS is uniquely positioned to tackle all three of these aspects (methodology, analysis and applications), and our goal is to drive research into them in a cohesive manner, where each aspect informs and is guided by the other two. MINDS concentrates on high-impact applications in various engineering and scientific fields, particularly imaging and finance, and on the following five research directions:

Deep Learning and Generative Modelling

Generative adversarial networks (GANs), introduced by I. Goodfellow et al. in 2014, constitute a class of distribution-learning machine learning methods that combine generative models with deep neural networks. GANs are formulated as a two-player zero-sum game between a generator and a discriminator, both represented by neural networks. Mathematically, such a game can be viewed as the minimisation of a divergence or probability metric between the data distribution and the generated distribution, expressed through its variational formula. GANs have been successfully employed in many applications, such as molecular biology, the discovery of new molecules, cosmology, climate change, computer vision and natural language processing. However, GAN training can be unstable and suffer from failures such as mode collapse, for reasons that are not well understood, and the available data and expert knowledge often induce conditional dependencies between model components. At MINDS, we develop the theoretical foundations needed to better understand these issues, design new GANs and other distribution-learning methods such as particle-based methods, and develop training methods for generative models that embody the underlying graph structure and expose its statistical and computational advantages.
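For concreteness, the original GAN objective of Goodfellow et al. (2014) is recalled below, where D is the discriminator, G the generator, p_z the latent noise distribution and p_g the distribution of G(z). For a fixed generator, the optimal discriminator turns the inner maximisation into a Jensen-Shannon divergence between the data and generated distributions, which is one instance of the variational divergence view described above. This is the standard textbook formulation, shown as an illustration rather than as the specific formulation used in MINDS work.

```latex
\min_{G}\max_{D}\; V(D,G)
  = \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

D^{*}_{G}(x) = \frac{p_{\mathrm{data}}(x)}{p_{\mathrm{data}}(x) + p_{g}(x)},
\qquad
V(D^{*}_{G}, G) = -\log 4 + 2\,\mathrm{JS}\big(p_{\mathrm{data}} \,\|\, p_{g}\big)
```

Minimising over G therefore amounts to minimising the Jensen-Shannon divergence, which is zero exactly when p_g = p_data; other GAN variants replace it with different divergences or probability metrics.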

Uncertainty Quantification

Owing to their high expressive power, stochastic models are often used to describe complicated phenomena. This modelling power comes at the cost of significant complexity, which makes it challenging to interrogate such models and to distil them into easy-to-digest statistics. Moreover, once data are incorporated, the model outputs are themselves uncertain, and intelligent decision making requires quantifying this uncertainty. This forms the core of Uncertainty Quantification and is a major focus of MINDS.

Inverse Problems and Bayesian Statistics

An inverse problem consists of estimating the parameters of a mathematical model from observational, often noisy, data. Inverse problems pose several kinds of challenges: statistical (noise), mathematical (underdetermined or ill-posed problems) and computational. In Bayesian statistics, the unknown parameter is modelled as a random variable, and the data are used to update its distribution so that the resulting posterior distribution accurately represents the available information and uncertainty about the parameter. While this approach addresses the noise and the inherent ill-posedness of the inverse problem, the computational challenges become much more pronounced. At MINDS, we focus on the solution and analysis of both deterministic and Bayesian inverse problems.
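To illustrate how the Bayesian approach copes with an underdetermined problem, here is a minimal sketch assuming a linear forward operator A, Gaussian observation noise and a Gaussian prior. These assumptions are chosen only because the posterior is then available in closed form; realistic models rarely have this structure, which is precisely where the computational challenges arise. The function name `linear_gaussian_posterior` and the toy problem sizes are hypothetical.

```python
import numpy as np


def linear_gaussian_posterior(A, y, noise_std, prior_std):
    """Posterior of x given y = A x + e, with e ~ N(0, noise_std^2 I)
    and prior x ~ N(0, prior_std^2 I).

    A Gaussian prior is conjugate for a linear Gaussian likelihood, so the
    posterior is Gaussian and its mean and covariance have closed forms.
    """
    n = A.shape[1]
    # Posterior precision = likelihood precision + prior precision.
    precision = A.T @ A / noise_std**2 + np.eye(n) / prior_std**2
    cov = np.linalg.inv(precision)
    mean = cov @ (A.T @ y) / noise_std**2
    return mean, cov


# Underdetermined toy problem: 3 noisy observations of 5 unknowns,
# so the data alone do not determine x (the problem is ill-posed).
rng = np.random.default_rng(0)
A = rng.standard_normal((3, 5))
x_true = rng.standard_normal(5)
noise_std, prior_std = 0.1, 1.0
y = A @ x_true + noise_std * rng.standard_normal(3)

mean, cov = linear_gaussian_posterior(A, y, noise_std, prior_std)
std = np.sqrt(np.diag(cov))  # posterior standard deviation of each unknown
print("posterior mean:", mean)
print("posterior std: ", std)
```

The posterior is well defined even though the data cannot pin down x, and its standard deviations quantify the remaining uncertainty. Its mean coincides with the Tikhonov-regularised least-squares solution, which links the Bayesian view to the optimisation view of inverse problems.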

Monte Carlo Methods

Monte Carlo methods, including Markov chain Monte Carlo, multilevel Monte Carlo, sequential Monte Carlo and particle filtering, are popular computational methods used in Uncertainty Quantification, Inverse Problems and even optimisation. They are easy to implement and parallelise, and because the convergence rate of standard Monte Carlo estimators does not depend on the dimension of the problem, they are well suited to high-dimensional problems. Nevertheless, that rate is slow (the error decreases only like 1/√N in the number of samples N), so these methods can be computationally expensive. At MINDS, we develop Monte Carlo-based methods and computational techniques that exploit the underlying structure of a wide range of problems to increase their efficiency.
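The basic trade-off can be seen in a minimal sketch of plain Monte Carlo, assuming a 100-dimensional standard Gaussian input and a quantity of interest (squared norm divided by the dimension) whose exact mean is 1. This is plain Monte Carlo only, not the multilevel, sequential or Markov chain variants named above; the helper names `monte_carlo_mean`, `gaussian_sampler` and `f` are hypothetical.

```python
import numpy as np


def monte_carlo_mean(f, sampler, n_samples, rng):
    """Plain Monte Carlo estimate of E[f(X)] together with its standard error.

    The standard error decays like n_samples**(-1/2) whatever the dimension
    of X; only the constant (the standard deviation of f(X)) depends on f.
    """
    samples = sampler(n_samples, rng)
    values = f(samples)
    estimate = values.mean()
    std_error = values.std(ddof=1) / np.sqrt(n_samples)
    return estimate, std_error


d = 100  # dimension of the random input X ~ N(0, I_d)


def gaussian_sampler(n, rng):
    # Draw n independent samples of X, one per row.
    return rng.standard_normal((n, d))


def f(x):
    # Quantity of interest: squared norm divided by d; its exact mean is 1.
    return np.sum(x**2, axis=1) / d


rng = np.random.default_rng(42)
for n in (100, 10_000):
    est, se = monte_carlo_mean(f, gaussian_sampler, n, rng)
    print(f"N={n:>6}: estimate={est:.4f}, standard error={se:.4f}")
```

Increasing N a hundredfold only shrinks the standard error about tenfold, which is the inefficiency that structure-exploiting variants such as multilevel Monte Carlo aim to reduce.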

Optimisation

Optimisation is ubiquitous in data science and underpins the solution of many of its problems, including several of those described above. It is used to find a minimiser (or maximiser) of an objective function, often subject to constraints. For instance, in inverse problems, the maximum a posteriori (MAP) estimate is the minimiser of the sum of a data-fidelity term and a regularisation term. In deep learning, neural networks are trained by minimising a loss, often combined with (possibly non-smooth) regularisation terms and with constraints that ensure valid solutions. At MINDS, we develop optimisation algorithms for minimising non-smooth, non-convex objectives subject to constraints. We focus on proximal, deep learning and fixed-point approaches, and rely on analytical tools such as stochastic optimisation, maximally monotone operator theory and the Kurdyka-Łojasiewicz property.
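As an illustration of the proximal approach, the sketch below computes a MAP-type estimate by minimising a least-squares data-fidelity term plus a non-smooth ℓ1 regulariser (which corresponds, for example, to Gaussian noise and a sparsity-promoting Laplace prior) using the classical proximal gradient method (ISTA). The choice of regulariser, the convex setting and the helper names `soft_threshold`, `proximal_gradient` and `objective` are assumptions for illustration; they are far simpler than the non-convex, constrained problems described above.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (componentwise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def proximal_gradient(A, y, lam, n_iter=500):
    """Minimise 0.5 * ||A x - y||^2 + lam * ||x||_1 by proximal gradient (ISTA).

    The smooth data-fidelity term is handled by a gradient step and the
    non-smooth l1 regulariser by its proximal operator.
    """
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)  # gradient of the data-fidelity term
        x = soft_threshold(x - step * grad, step * lam)
    return x


def objective(A, y, lam, x):
    # Data-fidelity term plus l1 regularisation term.
    return 0.5 * np.sum((A @ x - y) ** 2) + lam * np.sum(np.abs(x))


rng = np.random.default_rng(1)
A = rng.standard_normal((20, 50))
x_true = np.zeros(50)
x_true[[3, 17, 41]] = [2.0, -1.5, 1.0]  # sparse ground truth
y = A @ x_true + 0.01 * rng.standard_normal(20)

lam = 0.1
x_hat = proximal_gradient(A, y, lam)
print("objective at 0:    ", objective(A, y, lam, np.zeros(50)))
print("objective at x_hat:", objective(A, y, lam, x_hat))
print("nonzeros in x_hat: ", np.count_nonzero(x_hat))
```

With step size 1/L, each ISTA iteration does not increase the objective, and the soft-thresholding step sets small coefficients exactly to zero, which is how the non-smooth regulariser promotes sparse solutions.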

Group Members

Staff:

Dr Panagiota Birmpa, Dr Paul Dobson, Dr Abdul-Lateef Haji-Ali, Dr Marcelo Pereyra, Dr Audrey Repetti, Prof George Streftaris

Research Fellows and Associates:

Cecilia Tarpau, David Thong

Students:

Charlesquin Kemajou Mbakam, Teresa Klatzer, Alix Leroy, Savvas Melidonis, Iain Powell, Jonny Spence, Michael Tang, Simon Urbainczyk